Twitter-Hacking using Twint 🦅

- alvin gitonga

- Jul 30, 2022

- 4 min read

Welcome back Cyber warriors 💪. Today, Twitter!

🤌 On September 7, 2011, Twitter announced that it had 100 million active users logging in at least once a month and 50 million active users every day. On March 31, 2014, Twitter announced that there were 255 million monthly active users (MAUs) and 198 million mobile MAUs 🤭. As of Q1 2019, Twitter had more than 330 million monthly active users 🙆‍♀️.

Today we will be using a tool called Twint to scrape info on anyone we want on Twitter. All we need is their username 👌, and we can access their entire Twitter history without using Twitter or needing to have an account whatsoever 🌹

Twint utilizes Twitter’s search operators to let you scrape Tweets from specific users 😲, scrape Tweets relating to certain topics, hashtags & trends, or pick out sensitive information from Tweets such as e-mail addresses and phone numbers 🕵️‍♂️. I find this very useful, and you can get really creative with it too. Twint also makes special queries to Twitter that let you scrape a Twitter user's followers, the Tweets a user has liked, and who they follow, all without any authentication, API, Selenium, or browser emulation 🥷

Some of the benefits of using Twint vs Twitter API 🤌:

Can fetch almost all Tweets (Twitter API limits to last 3200 Tweets only);

Fast initial setup;

Can be used anonymously and without Twitter sign up;

No rate limitations.

So Cyber Morans, fire up your Kali Linux boxes and let's get cracking 💪

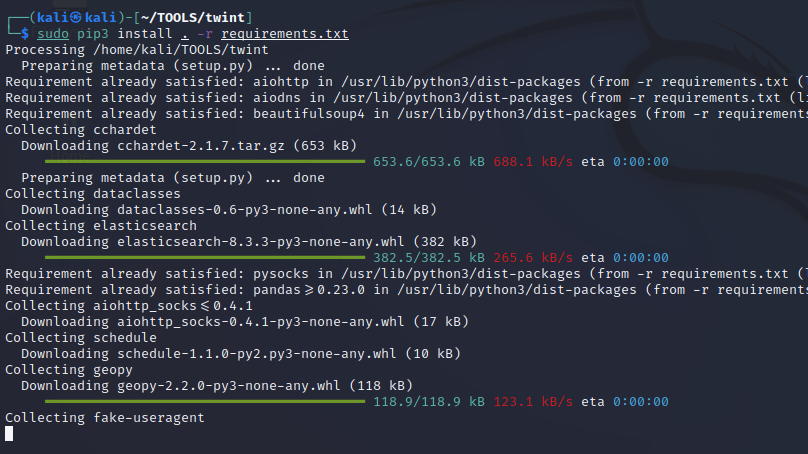

Installation 🚀

Open a new terminal on your Linux machine and run:

git clone --depth=1 https://github.com/twintproject/twint.git

After the download is complete, run cd twint to get into the folder, then:

pip3 install . -r requirements.txt

or

pip3 install twint

CLI Use: Basic Examples and Combos 🦉

👉twint -u username - Scrape all the Tweets of a user (doesn't include retweets but includes replies).

👉twint -u username -s pineapple - Scrape all Tweets from the user's timeline containing pineapple.

👉twint -s pineapple - Collect every Tweet containing pineapple from everyone's Tweets.

👉twint -u username --year 2014 - Collect Tweets that were tweeted before 2014.

👉twint -u username --since "2015-12-20 20:30:15" - Collect Tweets that were tweeted since 2015-12-20 20:30:15.

👉twint -u username --since 2015-12-20 - Collect Tweets that were tweeted since 2015-12-20 00:00:00.

👉twint -u username -o file.txt - Scrape Tweets and save to file.txt.

👉twint -u username -o file.csv --csv - Scrape Tweets and save as a csv file.

👉twint -u username --email --phone - Show Tweets that might have phone numbers or email addresses.

👉twint -s "NelsonHavi" --verified - Display Tweets by verified users that Tweeted about Nelson Havi.

👉twint -g="48.880048,2.385939,1km" -o file.csv --csv - Scrape Tweets from a radius of 1km around a place in Paris and export them to a csv file.

👉twint -u username -es localhost:9200 - Output Tweets to Elasticsearch.

👉twint -u username -o file.json --json - Scrape Tweets and save as a json file.

👉twint -u username --database tweets.db - Save Tweets to a SQLite database.

👉twint -u username --followers - Scrape a Twitter user's followers.

👉twint -u username --following - Scrape who a Twitter user follows.

👉twint -u username --favorites - Collect all the Tweets a user has favorited (gathers ~3200 Tweets).

👉twint -u username --following --user-full - Collect full user information for everyone a person follows.

👉twint -u username --timeline - Use an effective method to gather Tweets from a user's profile (Gathers ~3200 Tweets, including retweets & replies).

👉twint -u username --retweets - Use a quick method to gather the last 900 Tweets (that includes retweets) from a user's profile.

👉twint -u username --resume resume_file.txt - Resume a search starting from the last saved scroll-id.

👉twint -u noneprivacy --csv --output none.csv --lang en --translate --translate-dest it --limit 100 - Get 100 English Tweets and translate them to Italian.
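Once Tweets are saved with --csv, you can also do your own filtering on the file. Here is a rough Python sketch in the spirit of --email --phone. It is not how Twint does it internally: the two regular expressions are my own loose guesses, and the column name tweet is an assumption about the CSV layout, so check the header of your own file first.

```python
import csv
import re

# Deliberately loose patterns (assumptions, not Twint's own rules).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def find_contacts(rows, text_column="tweet"):
    """Return the rows whose text seems to contain an e-mail or phone number."""
    hits = []
    for row in rows:
        text = row.get(text_column, "")
        emails = EMAIL_RE.findall(text)
        phones = PHONE_RE.findall(text)
        if emails or phones:
            hits.append({"text": text, "emails": emails, "phones": phones})
    return hits


def find_contacts_in_csv(path, text_column="tweet"):
    """Run find_contacts over a CSV file saved by a scrape."""
    with open(path, newline="", encoding="utf-8") as handle:
        return find_contacts(csv.DictReader(handle), text_column)
```

Calling find_contacts_in_csv("file.csv") returns a list of dictionaries, one per matching Tweet. Expect false positives, since long number runs such as IDs can look like phone numbers.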

Twitter can shadow-ban accounts, which means that their Tweets will not be available via search. To get around this, pass --profile-full if you are using Twint via the CLI or, if you are using Twint as a module, add config.Profile_full = True. Please note that this process will be quite slow.
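In module form, that setting could be used roughly like this. It is a minimal sketch that assumes Twint is installed, that your Twint version still supports Profile_full, and that twint.run.Profile is the entry point that honours it. The function name scrape_full_profile is my own.

```python
def scrape_full_profile(username):
    """Scrape a user's Tweets from the profile instead of relying on search."""
    import twint  # imported here so the file loads even without Twint installed

    c = twint.Config()
    c.Username = username
    c.Profile_full = True  # slower, but does not depend on search results
    twint.run.Profile(c)
    return c
```

To try it, call scrape_full_profile("username") with a real handle and be ready to wait.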

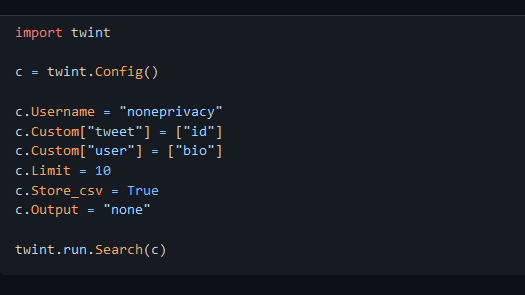

Twint can be imported as a library into a Python script to fully automate this process. Lemme show you.

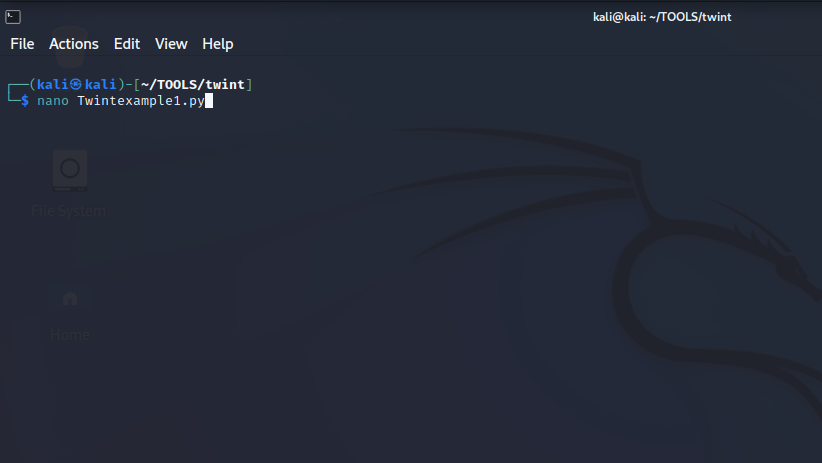

Still in that terminal, type nano twintexample.py and hit enter. This opens a text editor (nano) where we will write some Python 🐍 code 👇

Now let's write some Python 🐍 code. We will look up a web developer and tech memelord on Twitter, kirinyetbrian, and search for all his Tweets with the term 'dev' in them.

PS: 🔥 You can use any Twitter username you want as long as you have permission. This is because this tool will scrape Tweets from private and non-private users, as well as those that have blocked you 🔥

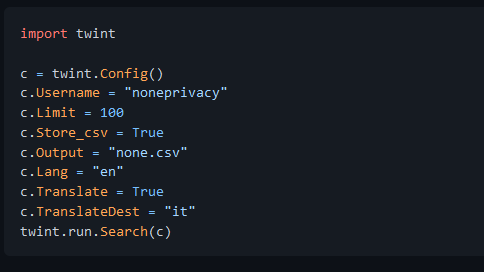

We import twint, create a variable c and assign it a twint.Config() object (it's in the twint lib we imported first), set the script's search parameters as attributes on c, and then call the search function at the end. Don't forget the (c): that is the function's argument.
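In case the screenshot is hard to read, here is a sketch of what such a script might look like. It is my reconstruction from the description above, not a copy of the original, and wrapping it in a function called search_user_tweets is my own choice.

```python
def search_user_tweets(username, keyword):
    """Search one user's Tweets for a keyword and print the matches."""
    import twint  # imported here so the file loads even without Twint installed

    c = twint.Config()     # holds all the search parameters
    c.Username = username  # whose Tweets to look at
    c.Search = keyword     # term the Tweets must contain
    twint.run.Search(c)    # don't forget the (c)
    return c


# To start the scrape, add a last line such as:
# search_user_tweets("kirinyetbrian", "dev")
```

Keeping the call on its own last line means you can swap in another username or keyword without touching the rest.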

ctrl + x to exit nano

y then enter to save script

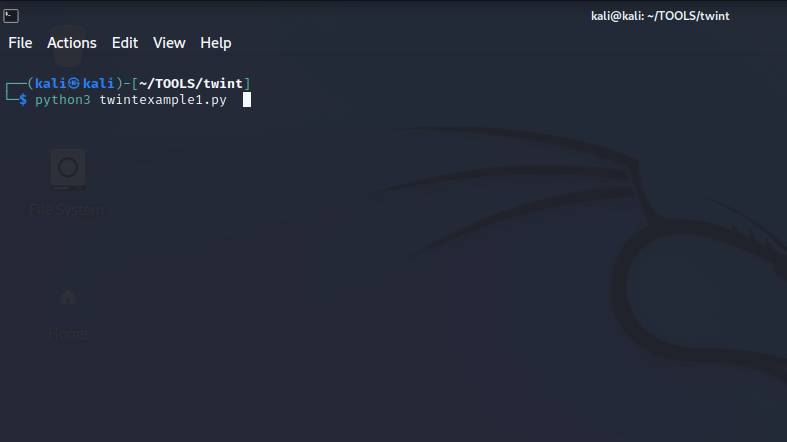

Now let's run it 👉 python3 twintexample.py 👇

Unfortunately ⛈️, I cannot show the results here since I DON'T have Brian's permission. However, run it yourself, use it on other users and even enhance the script to do more than that. You can get creative with this tool, like using it to track incitement to violence or to keep monitoring people who have blocked you or whose accounts are private.

I personally use it to look for events using a keyword like 'hatupangwingwi' to track UDA campaigns across Twitter (purely for oversight), or search key phrases to uncover malicious campaigns like fake forex exchange promotions, trace and track misinformation campaigns, etc. Twint's power is your imagination. Try more and have fun with other 🐍 scripts like:

This outputs the results into a .csv file 👆
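A sketch of a script along those lines, assuming the Store_csv and Output settings of twint.Config behave like the --csv and -o flags from the CLI section. The function name save_tweets_to_csv is mine.

```python
def save_tweets_to_csv(username, csv_path):
    """Scrape a user's Tweets and write them to a CSV file."""
    import twint  # imported here so the file loads even without Twint installed

    c = twint.Config()
    c.Username = username
    c.Store_csv = True   # same idea as --csv on the command line
    c.Output = csv_path  # same idea as -o file.csv
    twint.run.Search(c)
    return c


# To start the scrape, add a last line such as:
# save_tweets_to_csv("username", "file.csv")
```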

This translates the first 100 tweets from English to Italian 👆
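And a sketch of the translation one, mirroring the last CLI example in the list earlier. I am assuming the module settings are named Lang, Limit, Translate and TranslateDest, so check them against your Twint version. The function name translate_tweets is mine.

```python
def translate_tweets(username, csv_path, limit=100):
    """Scrape English Tweets from a user and translate them to Italian."""
    import twint  # imported here so the file loads even without Twint installed

    c = twint.Config()
    c.Username = username
    c.Lang = "en"           # only English Tweets
    c.Limit = limit         # how many Tweets to fetch
    c.Translate = True      # turn translation on
    c.TranslateDest = "it"  # target language: Italian
    c.Store_csv = True
    c.Output = csv_path
    twint.run.Search(c)
    return c


# To start the scrape, add a last line such as:
# translate_tweets("noneprivacy", "none.csv")
```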

Conclusion

Twint can be used to scrape information from Twitter users regardless of their privacy settings. It can be used to relentlessly pursue malicious activity, or by law enforcement 👮‍♂️ to gather information, hold hate speech to account, and trace the origins 🕵️‍♀️ and spread of misinformation, etc.

Thank you for your time. Like and leave a comment/review and, as always, I will see you in the next one. Till then, stay awesome! 👊 💪
